By Larry Neumeister

NEW YORK (AP) — It took only seconds for the judges on a New York appeals court to realize that the man addressing them from a video screen, who was about to present arguments in a lawsuit, not only had no law degree but did not exist at all.

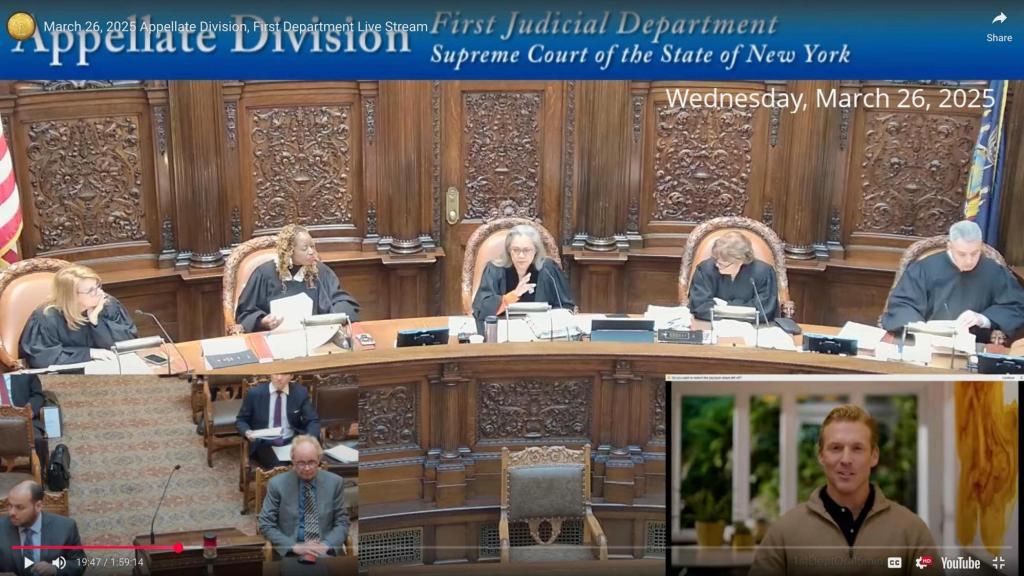

The latest strange chapter in the troubling arrival of artificial intelligence in the legal world unfolded on March 26 under the stained-glass dome of the First Judicial Department of the Appellate Division of the New York State Supreme Court, where a panel of judges was set to hear from Jerome Dewald, the plaintiff in an employment dispute.

“The appellant has submitted a video for his argument,” said Judge Sally Manzanette Daniels. “Okay. We will hear that video now.”

On the video screen appeared a smiling, youthful-looking man with a sculpted hairstyle, wearing a button-down shirt and a sweater.

“May it please the court,” the man began. “I come here today a humble pro se before a panel of five distinguished justices.”

“Okay, hold on,” Manzanette Daniels said. “Is that counsel for the case?”

“I generated it. It’s not a real person,” replied Dewald.

It was, in fact, an avatar generated by artificial intelligence. The judge was not pleased.

“It would have been nice to know that when you made your application. You did not tell me that, sir,” Manzanette Daniels said before shouting across the room for the video to be shut off.

“I don’t appreciate being misled,” she said, before letting Dewald continue with his argument.

Dewald later wrote an apology to the court, saying he had not intended any harm. He had no lawyer representing him in the lawsuit, so he had to present his legal arguments himself, and he felt the avatar could deliver the presentation without his usual mumbling, stumbling and tripping over words.

In an interview with The Associated Press, Dewald said he applied to the court for permission to play a prerecorded video, then used a product created by a San Francisco technology company to create the avatar. He originally tried to generate a digital replica that looked like himself but was unable to do so before the hearing.

“The court was really upset about it,” Dewald conceded. “They chewed me up pretty good.”

Even real lawyers have gotten into trouble when their use of artificial intelligence went awry.

In June 2023, two lawyers and their law firm were fined $5,000 by a federal judge in New York after they used an AI tool for legal research and, as a result, cited fictitious legal cases made up by a chatbot. The firm said it had made a “good faith mistake” in failing to understand that artificial intelligence might make things up.

Later that year, more fictitious court rulings invented by AI were cited in legal papers filed by lawyers for Michael Cohen, a former personal attorney for President Donald Trump. Cohen took the blame, saying he had not realized that the Google tool he was using for legal research was capable of so-called AI hallucinations.

Those were errors, but last month the Arizona Supreme Court intentionally began using two AI-generated avatars, similar to the one Dewald used in New York, to summarize court rulings for the public.

On the court’s website, the avatars, who go by “Daniel” and “Victoria,” say they are there “to share its news.”

Daniel Singh, an adjunct professor and assistant director at the Center for Legal and Court Technology at William & Mary Law School, said he was not surprised to learn that Dewald had introduced a fake person to argue an appeal in a New York court.

“From my perspective, it was inevitable,” he said.

He said it was unlikely that a lawyer would do such a thing because of tradition and court rules, and because lawyers could be disbarred. But he said individuals who appear without a lawyer and request permission to address the court are usually not given instructions about the risks of using a synthetically produced video to present their case.

Dewald said he tries to keep up with technology and recently listened to a webinar sponsored by the American Bar Association that discussed the use of AI in the legal world.

As for Dewald’s case, it was still pending before the appeals court as of Thursday.

Originally published: April 4, 2025, 5:02 PM EDT