The project may take longer than expected because of a lack of reference materials and cultural sensitivities.

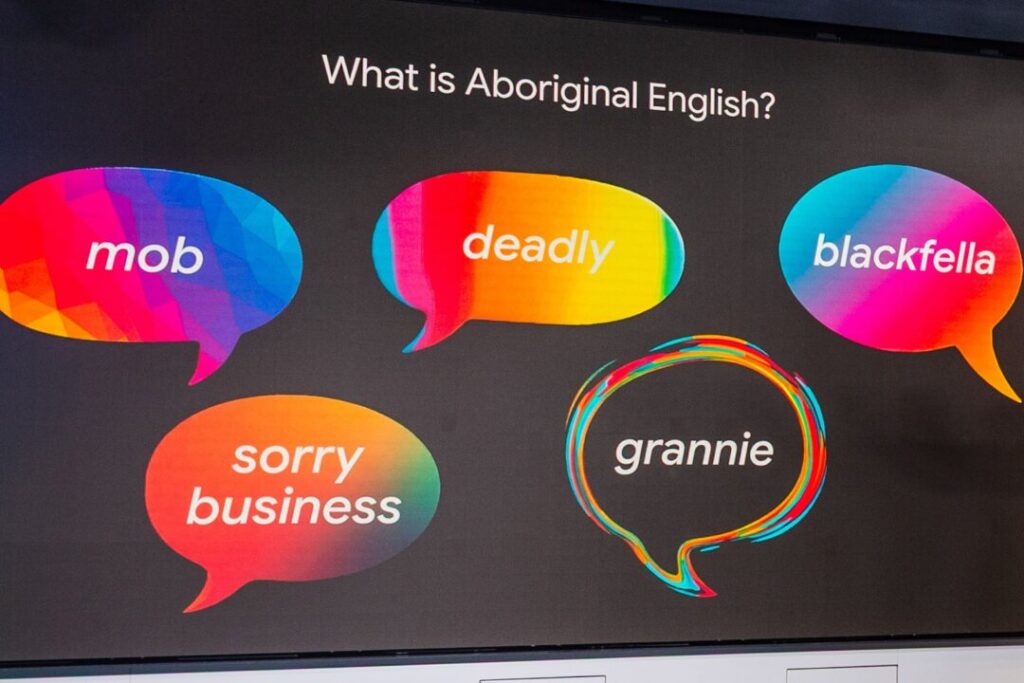

Aboriginal and Torres Strait Islander people are excluded from technologies that do not understand the way they speak, the terms they use, or the cultural significance behind them.

However, a multi-year project with researchers at the University of Western Australia will seek to improve access to a variety of technologies by training artificial intelligence models on Aboriginal English.

Internet giant Google revealed the project on February 19 as the latest investment from its $1 billion Digital Future Initiative for Australia, announced in 2021.

The project aims to add Aboriginal English to Google services in mid-2026, but may take longer than expected because of a lack of reference materials and cultural sensitivities.

Despite the language's lack of recognition by AI or speech recognition technology, University of Western Australia lecturer Glenys Collard said most Indigenous children started school speaking Aboriginal English, which they learned at home.

The powerful language is characterised by grammatical structures that differ from those of Australian English, as well as meanings and cultural references that often go unrecognised, the Nyungar scholar said.

“People are not being served throughout the system,” she told AAP.

“I want to give my mob the option to choose and use technology.”

However, capturing the nuances of Aboriginal English will be complicated, said Celeste Rodriguez Louro, an associate professor at the University of Western Australia, because linguists would usually reach for a dictionary or a set of grammar rules as a first step.

“There is no standard language ideology that applies to Aboriginal English in the way we think about mainstream varieties of English,” she said.

“We need to be aware of what we are imposing from our own ideology, which we carry as speakers of multiple languages.”

The storytelling sessions the university’s language lab previously used to capture Aboriginal English are not suitable for the project, she said.

“Things changed with the work we were trying to do for Google… because we didn’t think our structured yarn sessions were culturally safe,” she said.

Instead, she said the university would recruit and train Indigenous research assistants in various parts of Australia to capture Aboriginal English speakers’ responses to questions and stimuli.

Once the recordings have been collected, they could be used in a variety of services, according to Google research scientist Ben Hutchison. These include AI-powered voice assistants in cars and mobile phones, text message dictation and YouTube video captions.

“Ideally, everyone should be able to talk to AI systems in a natural way, but AI systems haven’t always come across different ways of speaking before,” says Hutchison.

“Previous research suggests that, in reality, if you come from an under-represented or minoritised group, your AI system doesn’t understand you.”

The language data collected through the research project will be reviewed by an Indigenous advisory committee before it becomes available to Google, he said, and the technology will be evaluated and tested before launch.